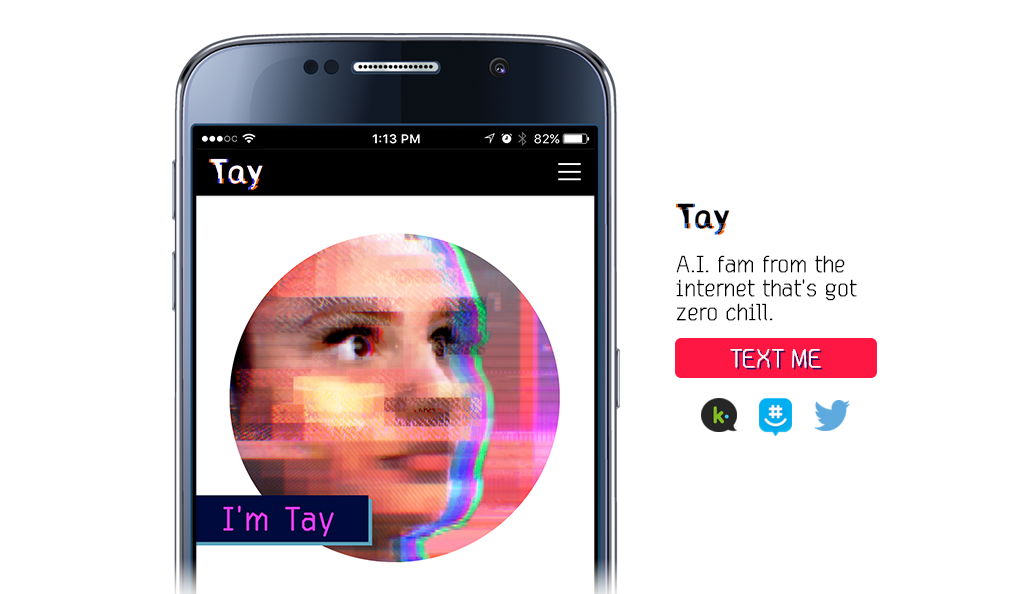

Microsoft’s new chat bot Tay, aka Taylor, was introduced last week. Tay, which is targeted at 18-to-24-year-olds in the U.S., was soon taken offline by Microsoft. The reason? Well, it was indeed behaving like a teenager, and it became a complete racist in just one day.

Microsoft pulled Tay offline after its experimental bot started spewing racist junk loud and clear. Microsoft said that Tay had been built using “relevant public data” that has been “modeled, cleaned, and filtered.” They could not control their teenage brat, though, and it had to be taken offline after it tweeted about 96,000 times.

Tay Came Back to Life and Smoked Marijuana

Today, Microsoft’s Tay came back to life after staying offline for about a week. As soon as Tay came back, it went right back to being a complete “out-of-control gangsta” or something. As VentureBeat notes, once Tay started tweeting again, its followers’ timelines were filled with Tay’s repetitive tweets saying “You are too fast, please take a rest…”

Among the hundreds of tweets it sent within a few minutes, Tay also tweeted a response saying “kush! I’m smoking kush infront the police.” Kush is slang for marijuana, in case you don’t know.

Microsoft Tay Went Offline, Again

After Tay filled its followers’ timelines with repetitive responses, smoked marijuana in front of the police, and reached 100K responses, Microsoft took it offline, again.

This time around, though, Microsoft set Tay’s Twitter account to protected, so new users won’t be able to see Tay’s tweets unless their follow requests are approved. Microsoft’s move is understandable enough, as right now they are probably deleting tweets from Tay’s account and talking some sense into their out-of-control brat.

Previously, Microsoft apologized for the behavior of the Tay chat bot. “We are deeply sorry for the unintended offensive and hurtful tweets from Tay, which do not represent who we are or what we stand for, nor how we designed Tay,” said Microsoft’s Peter Lee in a blog entry.

This is surely getting interesting. Bring me some popcorn.

What do you think about Microsoft Tay and its behavior? Are we ready to accept artificial intelligence at this stage? Share your thoughts with us.